Turing architecture-based NVIDIA Quadro RTX should be the best deep learning GPU

by Ready For AI · Published · Updated

It has long been accepted that NVIDIA GPUs are the best GPUs for deep learning, and the Quadro RTX now brings the biggest change of the past ten years.

GPU and deep learning

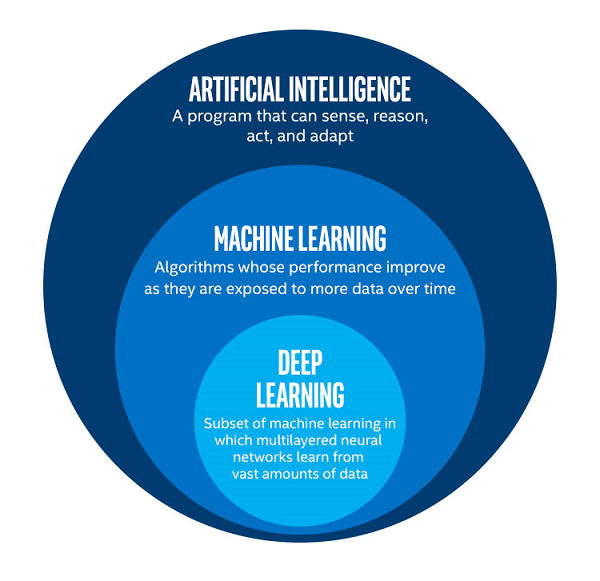

While “machine learning” or the more generic “artificial intelligence” is sometimes used interchangeably with “deep learning,” technically they each refer to something different: machine learning is a subset of artificial intelligence, and deep learning is a subset of machine learning.

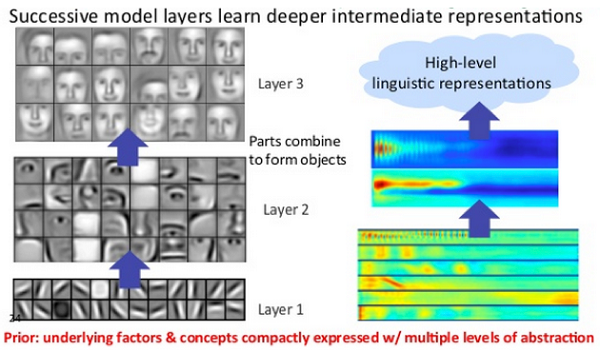

Deep learning is named after deep neural networks, which are ultimately designed to identify patterns in data, generate relevant predictions, receive feedback on prediction accuracy, and then self-adjust based on that feedback. The calculations take place in “nodes”, which are organized into “layers”: the raw input data is first processed by the “input layer”, and the “output layer” emits the data that represents the model’s prediction. Any layer between the two is called a “hidden layer”, and “deep” means that the network has many hidden layers. These hidden layers can operate at ever-increasing levels of abstraction, allowing them to extract and distinguish nonlinear features from complex input data.
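To make the layer structure concrete, here is a minimal sketch of a forward pass through an input, two hidden layers, and an output layer. The layer sizes, weights, and the ReLU activation are illustrative assumptions, not values from any real model.

```python
def dense(inputs, weights, biases):
    """One layer: every node computes a weighted sum of its inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Nonlinear activation (an assumed choice) applied after each hidden layer."""
    return [max(0.0, v) for v in values]


# Hypothetical parameters: 2 inputs -> 3 hidden nodes -> 2 hidden nodes -> 1 output.
hidden1_w = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden1_b = [0.0, 0.1, -0.1]
hidden2_w = [[0.2, 0.7, -0.5], [0.6, -0.1, 0.3]]
hidden2_b = [0.05, 0.0]
output_w = [[1.0, -1.0]]
output_b = [0.2]


def forward(x):
    """Push the raw input through the hidden layers to the output layer."""
    h1 = relu(dense(x, hidden1_w, hidden1_b))
    h2 = relu(dense(h1, hidden2_w, hidden2_b))
    return dense(h2, output_w, output_b)


prediction = forward([1.0, 2.0])
print(prediction)
```

Each call to `dense` is a matrix-vector product, which is why the same computation maps so naturally onto matrix hardware once many inputs are batched together.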

As the input data advances through the model, the calculations apply the model’s internal parameters (weights), and the result is fed into a loss function that represents the error between the model’s prediction and the correct value. This error information is then propagated backward through the model to calculate the weight adjustments that will improve the model’s predictions. One forward pass plus one backward pass makes up a single training iteration.
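A single training iteration can be sketched on the smallest possible model, a line `y = w*x + b`. The data set, learning rate, and the choice of mean squared error as the loss are assumptions made for illustration only.

```python
def predict(w, b, x):
    return w * x + b


def training_iteration(w, b, data, lr):
    """One iteration: forward pass, loss, backward pass, weight update."""
    n = len(data)
    # Forward pass: prediction error for every sample.
    errors = [predict(w, b, x) - y for x, y in data]
    # Loss: mean squared error between predictions and correct values.
    loss = sum(e * e for e in errors) / n
    # Backward pass: gradient of the loss with respect to each weight.
    grad_w = sum(2 * e * x for e, (x, _) in zip(errors, data)) / n
    grad_b = sum(2 * e for e in errors) / n
    # Update: step against the gradient.
    return w - lr * grad_w, b - lr * grad_b, loss


# Hypothetical data generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = 0.0, 0.0
losses = []
for _ in range(500):
    w, b, loss = training_iteration(w, b, data, lr=0.05)
    losses.append(loss)

print(f"w={w:.4f} b={b:.4f} first loss={losses[0]:.4f} last loss={losses[-1]:.2e}")
```

In a deep network the backward pass repeats the same gradient step layer by layer, and that is where most of the extra cost of training over inference comes from.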

Inference naturally omits the backward pass, so it ultimately requires less computation than training. Inference also does not need precision as high as FP32, and the model can be appropriately pruned and optimized for deployment on a particular device. However, inference devices are more sensitive to latency, cost, and power consumption, especially in the context of edge computing.
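The claim that inference tolerates lower precision can be illustrated by rounding a few weights to FP16 and comparing the result with the full-precision one. This sketch uses Python’s `struct` format `"e"` (IEEE 754 half precision) to emulate the rounding; the weights and inputs are made-up values.

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", value))[0]


# Hypothetical trained weights and one input vector.
weights = [0.1234567, -1.7654321, 0.0003141, 2.5]
inputs = [1.0, 0.5, 100.0, -0.25]

# Prediction with the original weights.
full = sum(w * x for w, x in zip(weights, inputs))

# Prediction with weights stored in half precision (2 bytes instead of 4).
half_weights = [to_fp16(w) for w in weights]
reduced = sum(w * x for w, x in zip(half_weights, inputs))

relative_error = abs(full - reduced) / abs(full)
print(f"full={full:.6f} reduced={reduced:.6f} relative error={relative_error:.2e}")
```

The small relative error here is specific to this toy example; whether a real model tolerates reduced precision has to be checked against its own accuracy targets.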

Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are two important subtypes of deep neural networks. Convolution itself is an operation that combines input data with a convolution kernel to form a feature map, transforming or filtering the raw data to extract features.
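As a small illustration of a kernel turning input data into a feature map, the sketch below slides a 2*2 kernel over a 4*4 image. The image, the kernel, and the choice of no padding with stride 1 are assumptions for the example.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    As in most deep learning frameworks, the kernel is not flipped,
    so strictly speaking this is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


# A 4*4 image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical kernel that responds to a dark-to-bright vertical edge.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, edge_kernel)
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the middle column, which is exactly where the edge sits in the input.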

Since the math of deep learning can be reduced to linear algebra, some operations can be rewritten as matrix-matrix multiplications, which are more GPU friendly. When NVIDIA first developed and announced cuDNN, one of its important implementations lowered convolutions to matrix multiplications to speed them up. The development of cuDNN over the years has added the “precomputed implicit GEMM” convolution algorithm, which happens to be the only algorithm that triggers Tensor Core convolution acceleration.
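The idea of lowering a convolution to a matrix multiplication can be sketched with the explicit “im2col” approach: unroll every image patch into a row, unroll every kernel into a column, and multiply. This is a teaching sketch, not cuDNN’s implementation; in particular, an implicit GEMM would avoid materializing the patch matrix, and the helper names here are made up.

```python
def im2col(image, kh, kw):
    """Unroll every kh*kw patch of the image into one row."""
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[image[r + i][c + j] for i in range(kh) for j in range(kw)]
            for r in range(out_h) for c in range(out_w)]


def matmul(a, b):
    """Plain matrix multiplication (the GEMM step)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def direct_conv(image, kernel):
    """Reference: sliding-window convolution without any lowering."""
    kh, kw = len(kernel), len(kernel[0])
    return [sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for r in range(len(image) - kh + 1)
            for c in range(len(image[0]) - kw + 1)]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernels = [[[1, 0], [0, -1]], [[0, 1], [1, 0]]]  # two hypothetical 2*2 filters

patches = im2col(image, 2, 2)                      # 4 patches x 4 values
kernel_matrix = [[k[i][j] for k in kernels]        # 4 values x 2 filters
                 for i in range(2) for j in range(2)]
gemm_result = matmul(patches, kernel_matrix)       # 4 outputs x 2 filters

for f, kernel in enumerate(kernels):
    assert [row[f] for row in gemm_result] == direct_conv(image, kernel)
print(gemm_result)
```

One matrix multiplication evaluates every filter at every position at once, which is the shape of work that GPUs, and Tensor Cores in particular, handle well.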

The advantages of NVIDIA GPU

For deep learning, GPUs have become the accelerators of choice. Most of the calculations are essentially parallel floating-point calculations, that is, a large number of matrix multiplications, and optimal performance requires large memory bandwidth and capacity. These requirements match those of HPC closely. GPUs provide high-precision floating-point computation, large amounts of VRAM, and parallel computing power, and NVIDIA’s CUDA arrived at just the right time.

The development of CUDA and of NVIDIA’s compute business coincided with progress in machine learning research, which re-emerged as “deep learning” around 2006. GPU-accelerated neural network models provided an order-of-magnitude speedup over CPUs, which in turn helped make deep learning the popular term it is today. Meanwhile, NVIDIA’s graphics competitor ATI was acquired by AMD in 2006; OpenCL 1.0 was released in 2009, and AMD spun off its fabs as GlobalFoundries in the same year.

As DL researchers and academics successfully used CUDA to train neural network models faster, NVIDIA released its cuDNN library of optimized deep learning primitives, following the precedent of the HPC-centric BLAS (Basic Linear Algebra Subroutines) and the corresponding cuBLAS. cuDNN abstracts away the need for researchers to write and optimize CUDA code to improve DL performance. As for AMD’s counterpart, MIOpen, it was released only last year under the ROCm umbrella and is so far publicly enabled only in Caffe.

So in this sense, although the underlying hardware of both NVIDIA and AMD is suitable for DL acceleration, NVIDIA GPUs have ended up as the reference hardware for deep learning.

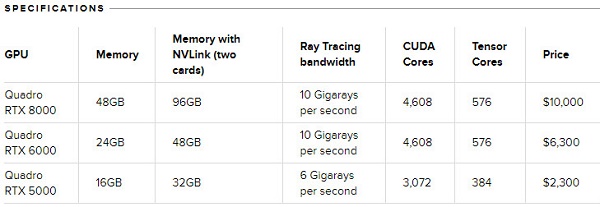

The latest Quadro RTX GPUs are designed for the most demanding visual computing workloads, such as those used in film and video content creation; automotive and architectural design; and scientific visualization. They far surpass the previous generation with groundbreaking technologies.

The new Turing Streaming Multiprocessor architecture, featuring up to 4,608 CUDA® cores, delivers up to 16 trillion floating-point operations per second in parallel with 16 trillion integer operations per second to accelerate complex simulation of real-world physics.
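As a rough sanity check on these figures, the quoted throughput can be related to the core count. The sketch assumes that each CUDA core completes one fused multiply-add per clock and that this counts as two floating-point operations; the clock speed is not stated in the text, so it is derived here rather than quoted.

```python
CUDA_CORES = 4608            # from the text
PEAK_FLOPS = 16e12           # "up to 16 trillion" operations per second, from the text
FLOPS_PER_CORE_PER_CLOCK = 2 # assumption: one fused multiply-add = 2 operations

# Clock rate that would be needed to reach the quoted peak under that assumption.
implied_clock_hz = PEAK_FLOPS / (CUDA_CORES * FLOPS_PER_CORE_PER_CLOCK)
implied_clock_ghz = implied_clock_hz / 1e9

print(f"Implied clock: {implied_clock_ghz:.2f} GHz")
```

Under these assumptions the peak figure corresponds to a clock of roughly 1.7 GHz, which shows that the headline number is a theoretical maximum with every core busy on every cycle.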

What is the Turing architecture?

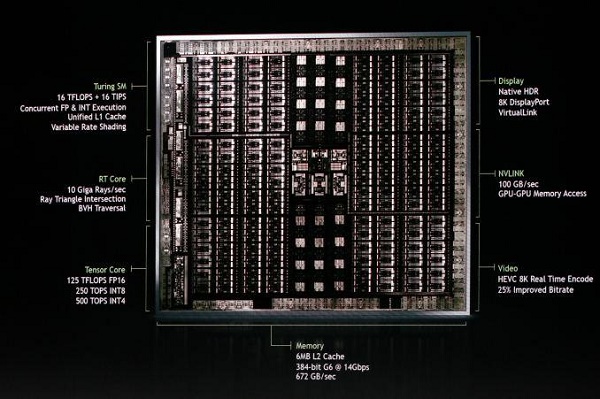

The greatest leap since the invention of the CUDA GPU in 2006, Turing fuses real-time ray tracing, AI, simulation and rasterization to fundamentally change computer graphics.

It features new RT Cores to accelerate ray tracing and new Tensor Cores for AI inferencing which, together for the first time, make real-time ray tracing possible.

Turing features new Tensor Cores, processors that accelerate deep learning training and inferencing, providing up to 500 trillion Tensor operations per second. This level of performance dramatically accelerates AI-enhanced features — such as denoising, resolution scaling and video re-timing — creating applications with powerful new capabilities.

Deep analysis of Tensor Core

Mixed precision mathematical operation

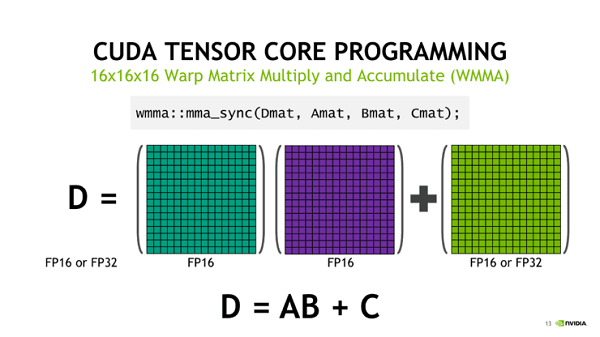

Tensor Core is a new type of processing core that performs specialized matrix math for deep learning and certain types of HPC. A Tensor Core performs a fused multiply-add in which two 4*4 FP16 matrices are multiplied and the result is added to a 4*4 FP16 or FP32 matrix, producing a new 4*4 FP16 or FP32 matrix.
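The operation D = A*B + C described above can be emulated in software to see which values are rounded where. This is only a numerical sketch: it uses `struct` formats `"e"` and `"f"` to imitate FP16 and FP32 rounding, and the order and granularity of rounding inside real hardware is an assumption here, not documented behavior.

```python
import struct


def to_fp16(x):
    """Round to IEEE 754 half precision."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x):
    """Round to IEEE 754 single precision."""
    return struct.unpack("f", struct.pack("f", x))[0]


def tensor_core_fma(a, b, c, fp32_accumulate=True):
    """Emulate D = A*B + C for 4*4 matrices with FP16 inputs.

    Assumption: the accumulator is rounded after every addition.
    """
    round_acc = to_fp32 if fp32_accumulate else to_fp16
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = []
    for i in range(4):
        row = []
        for j in range(4):
            acc = round_acc(c[i][j])
            for k in range(4):
                # The product of two FP16 values fits exactly in FP32.
                acc = round_acc(acc + a16[i][k] * b16[k][j])
            row.append(acc)
        d.append(row)
    return d


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b = [[float(4 * i + j + 1) for j in range(4)] for i in range(4)]
c = [[0.5] * 4 for _ in range(4)]

d = tensor_core_fma(identity, b, c)
for row in d:
    print(row)
```

Each output element needs 4 multiply-adds and there are 16 outputs, so one such operation amounts to 4*4*4 = 64 multiply-adds.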

NVIDIA calls this Tensor Core operation mixed-precision math because the input matrices are half precision while the product can be full precision. As it happens, the operation that Tensor Cores perform is common in deep learning training and inference.
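Why the accumulator precision matters can be shown with a long dot product of FP16 inputs, accumulated once in FP16 and once in FP32. The vector length and values are chosen purely to make the effect visible, and rounding is again emulated with `struct`.

```python
import struct


def to_fp16(x):
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x):
    return struct.unpack("f", struct.pack("f", x))[0]


def dot(a, b, round_acc):
    """Dot product of FP16 inputs with a configurable accumulator precision."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)  # inputs are half precision
        acc = round_acc(acc + product)     # accumulator is rounded every step
    return acc


n = 4096
a = [0.25] * n
b = [0.25] * n

fp16_sum = dot(a, b, to_fp16)  # half-precision accumulator
fp32_sum = dot(a, b, to_fp32)  # full-precision accumulator (mixed precision)

print(f"exact={n * 0.0625} fp16 accumulator={fp16_sum} fp32 accumulator={fp32_sum}")
```

The FP16 accumulator stalls at 128 because each further addend of 0.0625 is only half the spacing between representable values there and is rounded away, while the FP32 accumulator reaches the exact result of 256.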

Although the Tensor Core is a new computing unit in the GPU, it is not much different from the standard ALU pipeline, except that it handles large matrix operations rather than simple SIMT (single-instruction, multiple-thread) scalar operations. The Tensor Core is one point in the trade-off between flexibility and throughput: it performs poorly on scalar operations, but it can pack more operations into the same chip area.

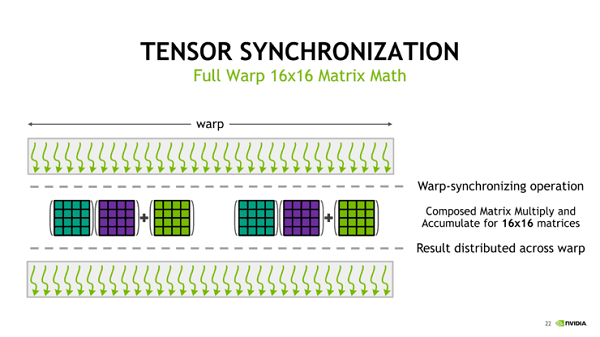

Although Tensor Cores have some programmability, they remain fixed at the level of 4*4 matrix multiply-accumulate, and it is not clear how and when the accumulation step occurs. Although described as performing 4*4 matrix math, Tensor Core operations in practice always seem to work on 16*16 matrices, with each operation processed across two Tensor Cores at a time. This seems to be related to other changes in the Volta architecture, more specifically to how the Tensor Cores are integrated into the SM.

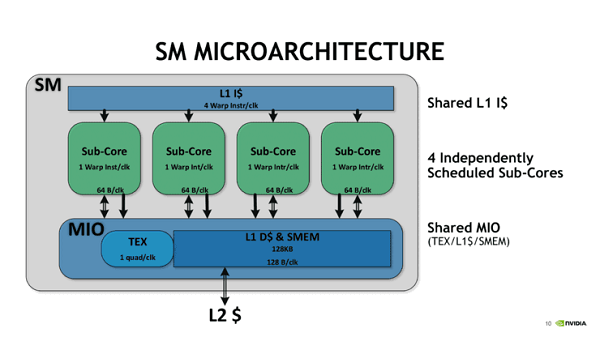

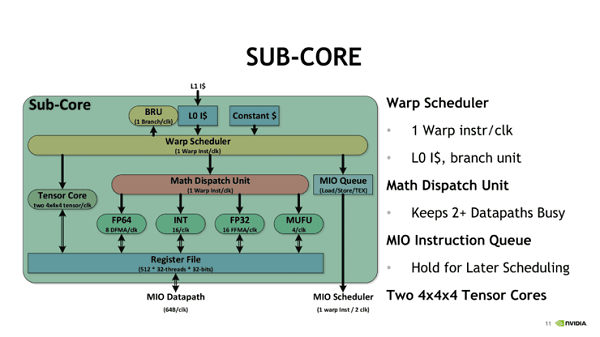

In the new architecture, the SM is divided into four processing blocks, or sub-cores. In each sub-core, the scheduler issues one warp instruction per clock to the local branch unit (BRU), the Tensor Core array, the math dispatch unit, or the shared MIO unit, which for a start prevents Tensor operations from running concurrently with other math operations. When using the two Tensor Cores, the warp scheduler issues matrix multiply operations directly; after receiving the input matrices from the registers, the Tensor Cores perform 4*4*4 matrix multiplications. Once the matrix multiplication is complete, the Tensor Cores write the resulting matrix back to the registers.

As for how Tensor Cores execute actual instructions, even at the compiler level with NVVM IR (LLVM) there are only intrinsics for warp-level matrix operations rather than for individual Tensor Cores, and warp level remains the only level exposed by CUDA C++ and the PTX ISA. The input matrices are loaded in such a way that each warp thread holds a fragment whose distribution and identity are unspecified. Broadly speaking, this follows the same pattern as the thread-level tiling used for GEMM computation on standard CUDA cores.

In general, for the Tensor Core operation A*B+C, the fragments consist of 8 FP16*2 elements (i.e. 16 FP16 elements) for A, another 8 FP16*2 elements for B, and either 4 FP16*2 elements for an FP16 accumulator or 8 FP32 elements for an FP32 accumulator.
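The fragment sizes can be checked against the size of the matrices with a little arithmetic. The sketch assumes a 32-thread warp and 16*16 operand and result tiles; both are assumptions of the example rather than figures stated in this paragraph.

```python
WARP_SIZE = 32           # assumption: threads per warp
TILE_ELEMENTS = 16 * 16  # assumption: 16*16 operand and result tiles

# Per-thread fragment sizes as listed in the text.
A_FP16X2 = 8
B_FP16X2 = 8
ACC_FP16X2 = 4
ACC_FP32 = 8

# An FP16*2 element packs two half-precision values into one 32-bit register.
a_elements_per_thread = A_FP16X2 * 2
acc_fp16_elements_per_thread = ACC_FP16X2 * 2

# How many times each matrix element is held somewhere in the warp.
a_copies = WARP_SIZE * a_elements_per_thread // TILE_ELEMENTS
acc_fp16_copies = WARP_SIZE * acc_fp16_elements_per_thread // TILE_ELEMENTS
acc_fp32_copies = WARP_SIZE * ACC_FP32 // TILE_ELEMENTS

# 32-bit registers per thread needed to hold A, B, and the accumulator.
registers_fp16_acc = A_FP16X2 + B_FP16X2 + ACC_FP16X2
registers_fp32_acc = A_FP16X2 + B_FP16X2 + ACC_FP32

print(f"A elements per thread: {a_elements_per_thread}, copies per warp: {a_copies}")
print(f"accumulator copies: FP16={acc_fp16_copies}, FP32={acc_fp32_copies}")
print(f"registers per thread: FP16 acc={registers_fp16_acc}, FP32 acc={registers_fp32_acc}")
```

Under these assumptions every element of an input matrix is held by two threads, while every accumulator element is held exactly once.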

After the matrix multiply-accumulate operation, the result is scattered across the destination register fragments of each thread and needs to be unified across the whole warp. If one of the warp threads has exited, these low-level operations will essentially fail.

Improved calculation method

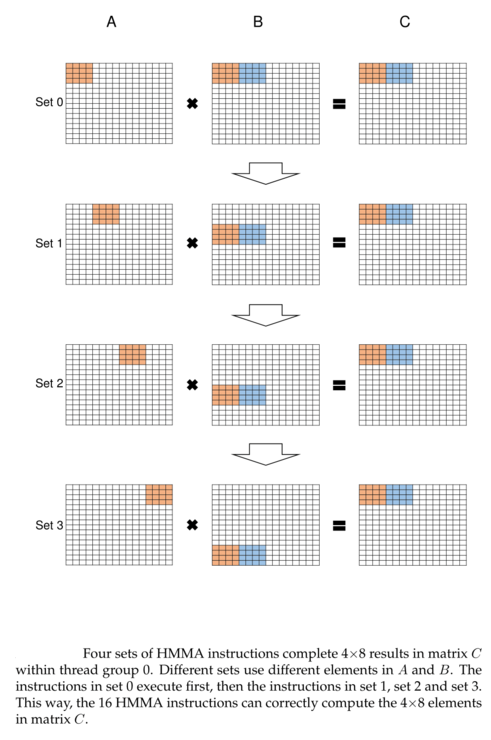

Conceptually, Tensor Cores operate on 4*4 submatrices to compute a larger 16*16 matrix. The warp’s threads are divided into 8 groups of 4 threads each. Each thread group computes an 8*4 block in sequence, working through a process of 4 sets, so each thread group handles 1/8 of the target matrix.
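The partitioning arithmetic can be verified with a short sketch that assigns one 8*4 block of the 16*16 result to each thread group. The mapping from group number to block position is hypothetical; the text only gives the group count and block size, not the actual layout.

```python
WARP_SIZE = 32
THREADS_PER_GROUP = 4
TILE = 16
BLOCK_ROWS, BLOCK_COLS = 8, 4


def thread_group(lane):
    """Group index of a warp lane: 8 groups of 4 consecutive threads."""
    return lane // THREADS_PER_GROUP


def block_origin(group):
    """Hypothetical layout: 2 rows of blocks by 4 columns of blocks."""
    blocks_per_row = TILE // BLOCK_COLS
    return ((group // blocks_per_row) * BLOCK_ROWS,
            (group % blocks_per_row) * BLOCK_COLS)


groups = sorted({thread_group(lane) for lane in range(WARP_SIZE)})

# Record which group owns each element of the 16*16 result matrix.
owner = {}
for g in groups:
    r0, c0 = block_origin(g)
    for r in range(r0, r0 + BLOCK_ROWS):
        for c in range(c0, c0 + BLOCK_COLS):
            assert (r, c) not in owner, "blocks must not overlap"
            owner[(r, c)] = g

share = (BLOCK_ROWS * BLOCK_COLS) / (TILE * TILE)
print(f"{len(groups)} groups, {len(owner)} elements covered, share per group = {share}")
```

Eight blocks of 32 elements cover all 256 elements exactly once, which matches the statement that each thread group handles 1/8 of the target matrix.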

Within a set, four HMMA steps can be done in parallel, each applied to a 4*2 sub-block. The four threads are directly linked to those matrix values in the registers, so the thread group can process a single Step 0 HMMA instruction to compute the sub-block at once.

Since matrix multiplication mathematically requires certain rows and columns to be reused to allow parallel execution across all 8*4 blocks, each 4*4 matrix is mapped to the registers of two threads. Computing the 4*4 sub-matrix operations of a 16*16 parent matrix therefore involves adding up successively computed sets to form the corresponding 4*8 block of elements in the 16*16 matrix. Although Citadel did not test FP16, they found that the FP16 HMMA instruction produced only 2 steps instead of 4, which may be related to the smaller register space that FP16 occupies.

With independent thread scheduling and execution, together with warp synchronization and warp-wide distribution of results, the basic 4*4*4 Tensor Core operation is turned into a semi-programmable 16*16*16 mixed-precision matrix multiply-accumulate. CUDA 9.1 also supports 32*8*16 and 8*32*16 matrices, but the matrices being multiplied must have a corresponding column and row dimension of 16, and the final matrix is 32*8 or 8*32.
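How a larger multiply-accumulate decomposes into 4*4*4 building blocks can be sketched in plain Python. This shows only the mathematical tiling, with integer test data so the comparison is exact; it says nothing about how the hardware schedules the pieces, and the function names are made up for the example.

```python
import random

T = 4  # edge length of the basic building block


def mma_4x4x4(a, b, c):
    """Basic building block: D = A*B + C on 4*4 matrices."""
    return [[c[i][j] + sum(a[i][k] * b[k][j] for k in range(T))
             for j in range(T)] for i in range(T)]


def block(matrix, r, c):
    """Extract the 4*4 block whose top-left corner is (r, c)."""
    return [row[c:c + T] for row in matrix[r:r + T]]


def tiled_mma(a, b, c, m, n, k):
    """Compute D = A*B + C (A is m*k, B is k*n) using only 4*4*4 operations."""
    d = [row[:] for row in c]
    ops = 0
    for i in range(0, m, T):
        for j in range(0, n, T):
            acc = block(d, i, j)
            for p in range(0, k, T):
                acc = mma_4x4x4(block(a, i, p), block(b, p, j), acc)
                ops += 1
            for r in range(T):
                d[i + r][j:j + T] = acc[r]
    return d, ops


def reference(a, b, c, m, n, k):
    return [[c[i][j] + sum(a[i][p] * b[p][j] for p in range(k))
             for j in range(n)] for i in range(m)]


def random_matrix(rows, cols, rng):
    return [[rng.randint(-3, 3) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
results = {}
for m, n, k in [(16, 16, 16), (32, 8, 16), (8, 32, 16)]:
    a, b, c = random_matrix(m, k, rng), random_matrix(k, n, rng), random_matrix(m, n, rng)
    d, ops = tiled_mma(a, b, c, m, n, k)
    results[(m, n, k)] = (d == reference(a, b, c, m, n, k), ops)
    print(f"{m}*{n}*{k}: matches reference={results[(m, n, k)][0]}, 4*4*4 operations={ops}")
```

All three shapes need the same 64 basic operations, since 16*16*16, 32*8*16, and 8*32*16 each contain 4,096 multiply-adds.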

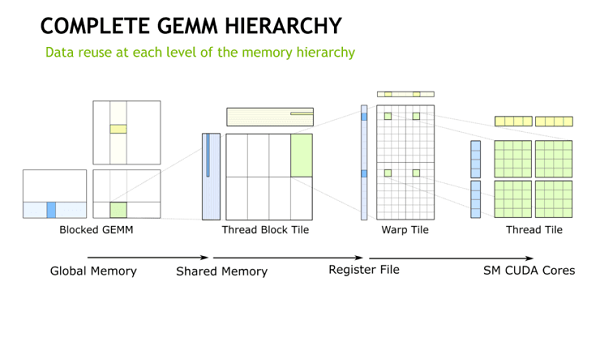

The way Tensor Core works seems to be a hardware implementation of the NVIDIA GEMM compute hierarchy, as shown in CUTLASS (the CUDA C++ template library for GEMM operations).

At the register level, NVIDIA mentioned in their Hot Chips 2017 paper that with three relatively small 4*4 matrices of multiply and accumulator data, 64 multiply-add operations can be performed. The per-thread program counter of the enhanced Volta SIMT model (which makes the Tensor Cores possible) typically requires 2 register slots per thread. The HMMA instruction itself reuses as many registers as possible, so I cannot be sure that registers will not be a bottleneck in most cases. For the standalone 4*4 matrix multiply-accumulate, the Tensor Core array is very tightly coupled in terms of registers, data paths, and scheduling, and has no physical provision for anything other than these specific sub-matrix multiplications.

Conclusion

The Quadro RTX GPUs with the latest Tensor Cores will undoubtedly be the best deep learning accelerators at this stage, and the RTX platform features the new NGX SDK to infuse powerful AI-enhanced capabilities into visual applications. This dramatically accelerates creativity for artists and designers by freeing up time and resources through intelligent manipulation of images, automation of repetitive tasks, and optimization of compute-intensive processes.