Artificial Intelligence emotion recognition may still be far away

by Ready For AI · August 8, 2018

Giving robots human-like emotions, including enabling artificial intelligence to recognize emotions, may remain an intractable problem for a long time, and the obstacles are not only technical.

Can a machine tell a genuine smile from a fake one?

According to one survey, people of every country and ethnic group can tell genuine smiles from fake ones with high accuracy; they easily sense whether a laugh is real or false. Take the “smirk boy” below, for example:

So, since people can tell genuine smiles from fake ones, can machines do the same? At the current level of technology, can artificial intelligence identify human emotions through image recognition and then make corresponding judgments? The answer, disappointingly, is no.

At present, the latest AI applications, including image and speech recognition, are widely used in fields such as photo recognition, image enhancement, and human-computer interaction. However, they are limited to identification and classification; surface-level recognition alone cannot achieve a deep understanding of emotions.

Does this mean that artificial intelligence can never read human expressions or have human emotions? Perhaps the outlook is not entirely pessimistic.

The outward expression of emotion follows certain patterns

We know that people express emotions in many ways: facial expressions, language, movements, and so on all serve as carriers of emotion, and different emotions take different forms. For example, when a person is happy, they smile: both corners of the mouth turn upward and the corners of the eyes lift slightly. If they are especially happy, they laugh out loud. This suggests that we can train machines on the expressions or actions associated with various emotions so that they learn to recognize those emotions.
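As a rough illustration of "training the machine on expressions", here is a minimal nearest-centroid classifier. It assumes each face has already been reduced to two hypothetical features (how far the mouth corners and eye corners are lifted); a real system would extract such features from images with a face-landmark detector, and the numbers below are invented for the example, not measured data.

```python
from math import dist

# Hypothetical training samples: (mouth_corner_lift, eye_corner_lift) -> label.
# The values are invented for illustration, not real measurements.
TRAINING = [
    ((0.80, 0.60), "happy"),
    ((0.70, 0.50), "happy"),
    ((0.10, 0.05), "neutral"),
    ((0.05, 0.10), "neutral"),
    ((-0.50, -0.20), "sad"),
    ((-0.60, -0.30), "sad"),
]

def train_centroids(samples):
    """Average the feature vectors of each label (nearest-centroid training)."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def classify(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = train_centroids(TRAINING)
print(classify(centroids, (0.75, 0.55)))  # happy
```

Note that a classifier like this only maps surface features to a label; a convincing fake smile with lifted mouth and eye corners would be classified as "happy" just the same.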

Analyzing personality from eye movements. A team of researchers from the University of Stuttgart in Germany, Flinders University in Australia, and the University of South Australia developed a machine learning algorithm trained on the eye movements of 42 subjects in everyday situations, which it then uses to assess their personality traits. The algorithm can reveal traits such as an individual's sociability and curiosity, and can identify four of the Big Five personality traits.

Micro-expression analysis. Recently, MIT researchers used machine learning to capture subtle changes in facial expressions in order to gauge a person's emotional state. By breaking 18 video clips down into individual frames, the model learns the emotion behind each expression. Notably, unlike the rigid, binary approach of traditional expression recognition, it can be retrained as needed and adapts well to individuals.
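To make the frame-by-frame idea concrete, the sketch below aggregates per-frame emotion scores into one label for a whole clip. The per-frame scores are assumed to come from some frame-level model, and the optional per-person "baseline" subtraction is a simple stand-in for individual adaptation that we chose for illustration; it is not MIT's actual method.

```python
from statistics import fmean

EMOTIONS = ("happy", "neutral", "sad")

def clip_emotion(frame_scores, baseline=None):
    """Aggregate per-frame emotion scores into one label for the whole clip.

    frame_scores: list of dicts mapping emotion -> score, one per frame
                  (hypothetical output of a frame-level model).
    baseline:     optional per-person resting scores, subtracted so the
                  decision adapts to an individual's typical face.
    """
    baseline = baseline or {e: 0.0 for e in EMOTIONS}
    averaged = {
        e: fmean(frame[e] for frame in frame_scores) - baseline[e]
        for e in EMOTIONS
    }
    return max(averaged, key=averaged.get)

frames = [
    {"happy": 0.6, "neutral": 0.3, "sad": 0.1},
    {"happy": 0.5, "neutral": 0.4, "sad": 0.1},
    {"happy": 0.2, "neutral": 0.7, "sad": 0.1},
]
print(clip_emotion(frames))  # neutral
# Same clip, for a (hypothetical) person whose resting face already scores high on "neutral":
print(clip_emotion(frames, {"happy": 0.1, "neutral": 0.5, "sad": 0.1}))  # happy
```

The two calls show why per-person adaptation matters: identical frames can mean different things depending on what is normal for that individual's face.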

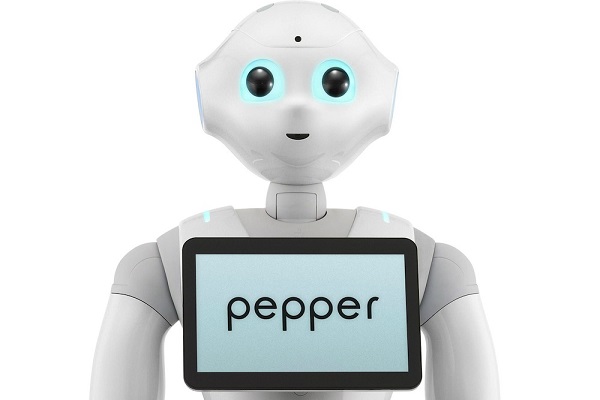

Analysis of speech and language. SoftBank's emotional robot Pepper, from Japan, has a camera that gives it some ability to recognize facial expressions, while cloud-based speech recognition lets it judge the tone of a person's voice and thereby infer the speaker's emotions, fulfilling its role as an “emotional robot.” Similarly, an online customer service system developed by IBM can learn to recognize emotions hidden in people's grammar and typing speed; Microsoft's Xiao Bing is another interactive application of emotion recognition along these lines.

In addition, wearable devices that capture physiological signals such as pulse rate can also contribute to emotion recognition. In summary, backed by face recognition, speech recognition, sensors, and various data algorithms, AI emotion recognition does seem to be steadily advancing.
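One common way to combine face, voice, and sensor signals is "late fusion": each modality produces its own emotion scores, and a weighted average merges them. The sketch below assumes hypothetical modality names, scores, and weights chosen only for illustration; real systems would learn the weights from data.

```python
def fuse(modality_scores, weights):
    """Weighted late fusion of per-modality emotion scores.

    modality_scores: e.g. {"face": {...}, "voice": {...}, "pulse": {...}},
                     each mapping emotion -> score (hypothetical inputs).
    weights:         modality -> weight; modalities with no scores are skipped.
    Returns (best_label, fused_scores).
    """
    available = [m for m in weights if m in modality_scores]
    total = sum(weights[m] for m in available)
    if total == 0:
        raise ValueError("no weighted modality available")
    emotions = modality_scores[available[0]].keys()
    fused = {
        e: sum(weights[m] * modality_scores[m][e] for m in available) / total
        for e in emotions
    }
    return max(fused, key=fused.get), fused

scores = {
    "face":  {"happy": 0.7, "calm": 0.2, "stressed": 0.1},  # smiling face
    "voice": {"happy": 0.2, "calm": 0.3, "stressed": 0.5},  # tense tone
    "pulse": {"happy": 0.1, "calm": 0.2, "stressed": 0.7},  # elevated pulse
}
weights = {"face": 0.4, "voice": 0.3, "pulse": 0.3}

label, fused = fuse(scores, weights)
print(label)                                    # stressed
print(fuse({"face": scores["face"]}, weights)[0])  # happy (face alone)
```

In this made-up example the face alone suggests happiness, but voice and pulse tip the combined judgment toward stress, which is the kind of cross-checking a single modality cannot do.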

Why emotion recognition still falls short

If all these technologies are being applied to emotion recognition, why do real-world applications still fall far short of what people expect?

First, technical research is siloed.

Many researchers approach the problem through the technical fields they excel in: some specialize in image recognition, some in speech recognition, others in sensor data analysis. Each researcher or team has its own technical strengths but also weaknesses, which produce a “cask effect”: just as a barrel can hold water only up to its shortest stave, an emotion recognition system is limited by its weakest component.

Second, human emotions are complex.

Even for a person who is good at communicating with others, judging emotions is rarely based on a single expression or a single sentence. It usually requires combining the surrounding context, what was said before and after, and multiple expressions. These factors are the key basis for accurately determining the other party's emotions.

Third, emotional changes are difficult to pin down.

Humans are among the few creatures that can fake expressions, and they are also a species with highly varied mood changes. This makes emotion recognition even harder. As some psychologists put it, “Everyone has a little evil inside; some are just better at hiding it.” A machine cannot detect what is hidden this way.

Conclusion

In general, AI emotion recognition needs to be built on three-dimensional, multi-dimensional analysis, and only by judging whether people's outward behavior is genuine or false can it accurately describe the complex psychological activity behind it. There is still a long way to go.
