Apple Conference 2018 is not about the new iPhone or Watch but about Apple AI

by Ready For AI

Giants such as Google and Microsoft have already reorganized their strategies around AI, and Apple's AI development seems to be lagging behind. Is this really true?

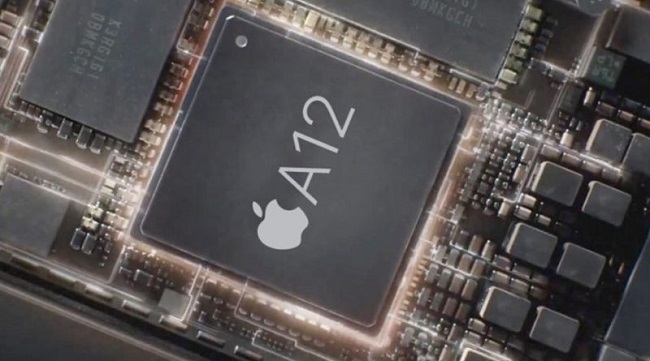

A12 Bionic chip

At the just-concluded Apple Conference 2018, Apple spent half of its time introducing the A12 Bionic chip, so many viewers felt they were watching a chip launch. At Apple Conference 2017, Apple had introduced the A11 Bionic chip, which Apple’s senior vice president Phil Schiller called “the most powerful smartphone chip.”

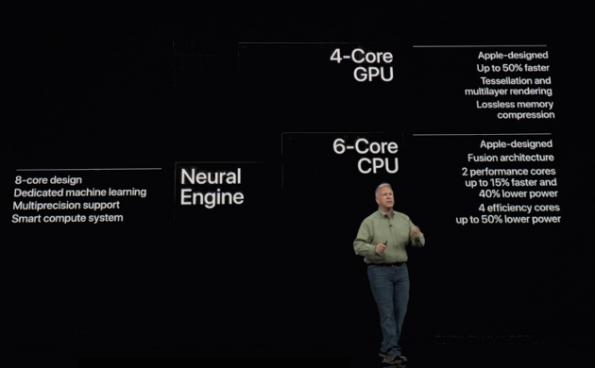

The A12 Bionic is built on the latest 7nm manufacturing process and has a 6-core CPU and a 4-core GPU. The CPU consists of 2 performance cores and 4 efficiency cores: the performance cores are up to 15% faster than those of the A11 Bionic, and the efficiency cores use up to 50% less power. The GPU is up to 50% faster than the one in the A11 Bionic. Judging from the presentation, the A12 Bionic surpasses the previous generation in every respect.

The most powerful part of the A12 Bionic is its new Neural Engine, a component Apple first introduced with the A11 Bionic last year. It grows from the 2-core design of the A11 Bionic to 8 cores, which lets it support more complex machine learning models. The chip also has a smart compute system that distributes computing tasks across the CPU, GPU, and Neural Engine. The Neural Engine on the A11 Bionic can perform 600 billion operations per second, while the one on the A12 Bionic can perform 5 trillion operations per second, a leap of more than eight times.
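To put the Neural Engine numbers in perspective, here is a small back-of-the-envelope calculation. It assumes the figures Apple quoted on stage (600 billion operations per second on 2 cores for the A11 Bionic, 5 trillion on 8 cores for the A12 Bionic); the function names are only illustrative, and the per-core split is a naive average, not something Apple published.

```python
# Figures as quoted by Apple on stage (operations per second).
A11_OPS_PER_SEC = 600e9   # A11 Bionic Neural Engine, 2 cores
A12_OPS_PER_SEC = 5e12    # A12 Bionic Neural Engine, 8 cores


def speedup(new_ops: float, old_ops: float) -> float:
    """Return how many times larger new_ops is than old_ops."""
    if old_ops <= 0:
        raise ValueError("old_ops must be positive")
    return new_ops / old_ops


def ops_per_core(total_ops: float, cores: int) -> float:
    """Naive average: total throughput divided evenly across cores."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_ops / cores


overall = speedup(A12_OPS_PER_SEC, A11_OPS_PER_SEC)
per_core = speedup(ops_per_core(A12_OPS_PER_SEC, 8),
                   ops_per_core(A11_OPS_PER_SEC, 2))

print(f"Overall throughput: about {overall:.1f}x")   # about 8.3x
print(f"Average per core:   about {per_core:.2f}x")
```

In other words, roughly half of the gain would come from having four times as many cores, and the rest from each core doing more work.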

As a result, the camera, video, voice assistant, Face ID, and AR features on the iPhone, all of which draw on the chip's CPU, GPU, and Neural Engine, have risen to a whole new level.

Computer vision

Computer vision is one of the main artificial intelligence technologies and the most mature one currently used on smartphones. Its main functions include face recognition, gesture recognition, human motion tracking, image recognition, and object recognition.

Real-time face recognition is extremely demanding, and Apple deliberately set aside part of the Neural Engine on the A11 Bionic to support Face ID. Phil Schiller said that the new Neural Engine on the A12 Bionic identifies parts of the face more effectively and speeds up Face ID recognition. Because all data processing and calculation run locally on the A12 Bionic and no cloud AI service is involved, the security of Face ID is further improved.

Face recognition on a phone is technically difficult in itself, but the long-distance human motion recognition that Apple showed this time is clearly more challenging. On stage, Apple demonstrated an app called “HomeCourt” that records and tracks basketball play and keeps score in real time. With the powerful Neural Engine, it can track and identify human motion in real time, predict trajectories, analyze six motion metrics in real time, and even accurately determine the angle of a shot.

Smart HDR

In the new era of AI phones, photography has become the biggest showcase for AI image processing, and Apple’s new products are no exception.

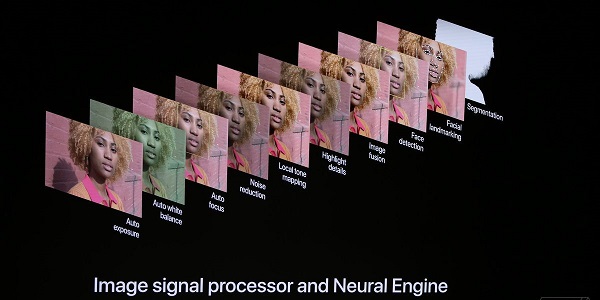

The conference introduced the new Smart HDR shooting function. With HDR, the camera takes multiple photos at different exposure levels when the shutter is pressed and then merges them, so that detail in both the bright and the dark areas of the scene can be seen more clearly.
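The idea of merging differently exposed frames can be sketched in a few lines. The following is a simplified, generic exposure-fusion example, not Apple's Smart HDR pipeline, whose details were not disclosed. It assumes grayscale pixels in the range 0.0 to 1.0, and the names `well_exposedness` and `fuse_exposures` are made up for illustration.

```python
import math
from typing import List


def well_exposedness(value: float, sigma: float = 0.2) -> float:
    """Weight a pixel (0.0 = black, 1.0 = white) by its closeness to mid-gray."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(frames: List[List[float]]) -> List[float]:
    """Blend same-sized frames pixel by pixel, favoring well-exposed values."""
    if not frames:
        raise ValueError("need at least one frame")
    width = len(frames[0])
    if any(len(frame) != width for frame in frames):
        raise ValueError("all frames must have the same size")

    fused = []
    for pixels in zip(*frames):  # the same pixel position in every frame
        weights = [well_exposedness(p) for p in pixels]
        total = sum(weights)
        fused.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return fused


# Three pixels of one scene: a deep shadow, a mid-tone, and a bright highlight.
underexposed = [0.02, 0.10, 0.45]   # keeps highlight detail, crushes shadows
overexposed = [0.40, 0.70, 0.98]    # keeps shadow detail, clips highlights
fused = fuse_exposures([underexposed, overexposed])
print([round(v, 2) for v in fused])
```

For each pixel, the frame whose value is closer to mid-gray dominates the blend, so the shadow is taken mostly from the brighter frame and the highlight mostly from the darker one. Real pipelines also align the frames and blend across multiple scales, which this sketch leaves out.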

Smart HDR also brings zero shutter lag, more highlight and shadow detail, and better results in backlit scenes. This is achieved not with the usual ISP plus CPU, but with the ISP working together with the Neural Engine. In other words, every time you take a photo, the phone performs about one trillion operations to produce the final image, which is really amazing.

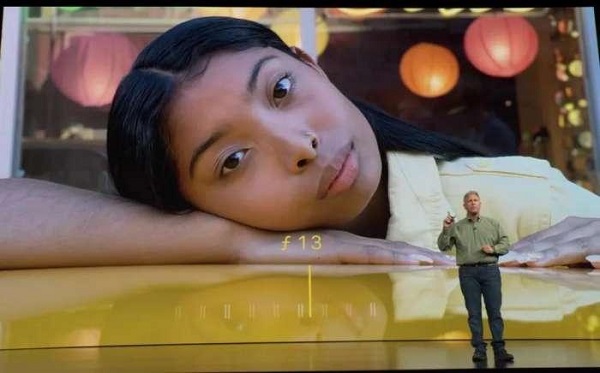

The new camera also supports post-focusing. Apple trained the neural network with photos taken on SLR cameras, which makes it possible to take a picture first and choose the blurred area afterward. Judging from the examples shown on stage, the results are quite satisfying.

Ubiquitous AI applications

Apple has made many attempts to use machine learning for a better user experience. Examples include recognizing the text of handwritten notes on the iPad, learning and predicting the user’s habits on the iPhone to make iOS more power-efficient, and automatically creating memory albums and recognizing faces in the Photos app. Siri also uses machine learning to offer more personal features and smoother responses. The same goes for some of the new features we saw at this year’s WWDC:

Smart Photo Sharing

When a user shares photos, the system finds related photos to include and recommends the people who appear in them as recipients. When the other party receives the photos, the system in turn suggests related photos from their own phone to send back. In this way, both parties can easily end up with a complete set of photos from the same event.

Siri Shortcuts

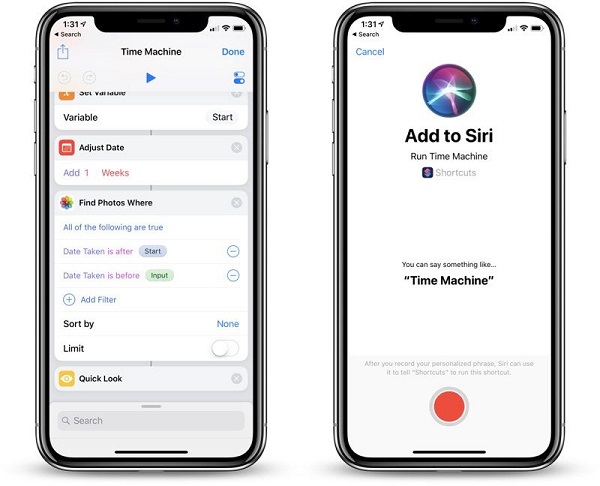

Each application can offer shortcuts to Siri, making Siri more intelligent, convenient, and user-friendly. For example, in the Tile app you can add a shortcut to Siri with the phrase "I lost my keys". When you then say that phrase, Siri opens Tile's key-finding function directly in the Siri interface, without you having to open the app yourself.

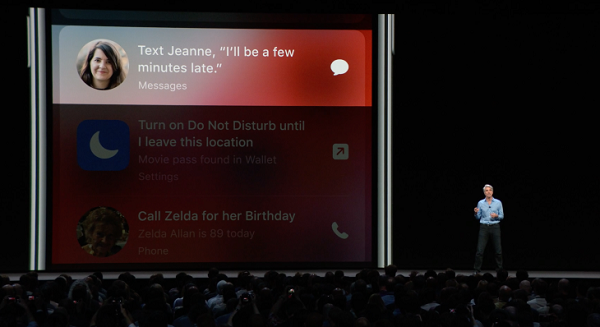

Siri Suggestions

Siri predicts what you are about to do based on your usage behavior and offers suggestions at the appropriate time. For example, suppose there is a party in your schedule, and Siri finds from your location that the party time is approaching while you are still far away. Siri will then offer to send a quick message telling your friend that you will be a few minutes late.

Developer ecosystem

With this extremely powerful A12 Bionic chip in hand, Apple, which has always preferred a closed ecosystem, is also actively encouraging the development of AI applications on its platform to further strengthen its AI capabilities.

This brings us to Apple’s high-performance machine learning framework, Core ML, which helps developers quickly integrate machine learning models into their applications. The event showcased the upgraded Core ML, which makes machine learning models run faster: processing speed increases by 30%, while model size can be reduced by up to 75%.
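A 75% size reduction is what you would get by storing each model weight in 8 bits instead of a 32-bit float. The sketch below illustrates that arithmetic with a simple linear quantizer. It is an assumption about where such a figure can come from, not a description of how Core ML works internally, and the helper names are hypothetical.

```python
from typing import List, Tuple


def quantize_8bit(weights: List[float]) -> Tuple[List[int], float, float]:
    """Map float weights onto 256 evenly spaced levels (one byte per weight)."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255
    if scale == 0:
        scale = 1.0  # all weights identical; any positive scale works
    codes = [min(255, max(0, round((w - lo) / scale))) for w in weights]
    return codes, scale, lo


def dequantize(codes: List[int], scale: float, lo: float) -> List[float]:
    """Recover approximate float weights from their 8-bit codes."""
    return [lo + c * scale for c in codes]


def size_reduction(bits_before: int, bits_after: int) -> float:
    """Fraction of storage saved when each weight shrinks from one width to another."""
    return 1 - bits_after / bits_before


weights = [-1.2, -0.3, 0.0, 0.41, 0.9]          # made-up example weights
codes, scale, lo = quantize_8bit(weights)
restored = dequantize(codes, scale, lo)

print(f"Saved: {size_reduction(32, 8):.0%}")     # Saved: 75%
print(f"Largest rounding error: {max(abs(a - b) for a, b in zip(weights, restored)):.4f}")
```

The trade-off is precision: every weight is rounded to the nearest of 256 levels, so the error per weight is at most half a step. Whether that is acceptable depends on the model, which is why quantized models are usually re-checked for accuracy.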

According to Apple, applications also start up to 30% faster on the new chip, and Core ML on the A12 Bionic needs as little as one-tenth of the energy it used before. This lets developers use Core ML to run more complex AI models more smoothly. Apple also released Create ML, which supports building models for machine learning tasks such as computer vision and natural language processing, with training performed directly on the Mac.

Conclusion

In the AI era, better algorithms place higher demands on the computing power of chips. With the help of its Neural Engine, Apple’s A12 Bionic has become the strongest chip for mobile devices. Apple has also built an ecosystem for AI developers through machine learning tools such as Core ML and Create ML. I believe that in the near future, Apple will deliver more amazing results in the field of AI.