Where is the boundary of Artificial Intelligence ethics?

by Ready For AI · July 29, 2018

Algorithm-based image recognition has been used to infer a person’s sexual orientation from a photo, which poses a serious challenge for artificial intelligence ethics.

Judging sexual orientation will become easier

If you want to know something as intimate as a person’s sexual orientation, you may no longer need to ask, because artificial intelligence can reportedly be used to identify it with considerable accuracy. This news may make you very uncomfortable, but it is true. Not long ago, two researchers at Stanford University developed a neural network that tries to detect a person’s sexual orientation by studying a facial image.

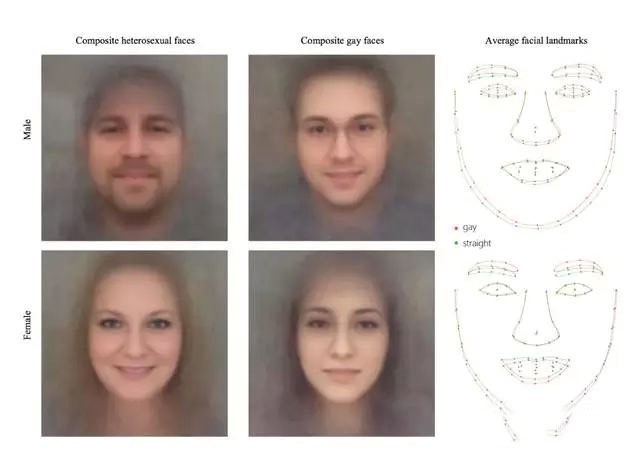

The researchers trained a neural network on more than 35,000 facial images, evenly split between gay and heterosexual people. They interpreted what the algorithm picked up in terms of genetic or hormone-related features, following prenatal hormone theory (PHT). This theory holds that male sex hormones in the uterus are responsible for sexual differentiation and are the main driving force behind homosexual orientation later in life. The study also argued that these androgens affect key facial features to some extent, which means that certain facial features may be related to sexual orientation.

The study found that gay men and lesbians tend to have “atypical gender characteristics,” meaning that gay men tend to look more feminine, while lesbians tend towards masculinity. In addition, the researchers found that gay men usually have a narrower chin, a longer nose, and a larger forehead than heterosexual men, while lesbians have larger chins and smaller foreheads than heterosexual women.

In the test, the machine was shown two randomly selected images, one of a gay man and one of a heterosexual man, and it picked out the gay man with an accuracy of 81%. When five images of each person were compared, the accuracy rose to 91%. Prediction accuracy was lower for women: 71% with a single image and 83% with five images.
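One way to read this kind of pairwise test: the classifier gives every face a score, and a trial counts as correct when the image from one group scores higher than the image from the other. The following is a minimal sketch of that calculation. The scores are invented for illustration and are not data from the study, and `pairwise_accuracy` is a hypothetical helper name.

```python
from itertools import product


def pairwise_accuracy(pos_scores, neg_scores):
    """Fraction of (positive, negative) pairs ranked correctly.

    A pair is correct when the positive example scores higher.
    Ties count as half a correct answer.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("both groups need at least one score")
    correct = 0.0
    for p, n in product(pos_scores, neg_scores):
        if p > n:
            correct += 1.0
        elif p == n:
            correct += 0.5
    return correct / (len(pos_scores) * len(neg_scores))


# Hypothetical classifier scores for two groups of images.
group_a = [0.9, 0.7, 0.6, 0.4]
group_b = [0.8, 0.5, 0.3, 0.2]

print(pairwise_accuracy(group_a, group_b))  # 12 of 16 pairs are ranked correctly: 0.75
```

A pairwise figure like this is not the same as the share of individuals in a general population who would be labeled correctly, since that also depends on how common each group is.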

All of this makes people uncomfortable, because AI’s recognition of sexual orientation is clearly a privacy matter. It suggests that the billions of public images stored in social networks and government databases could be used to identify people’s sexual orientation without their permission, which is likely to alarm most people.

Relationships between people can be recognized

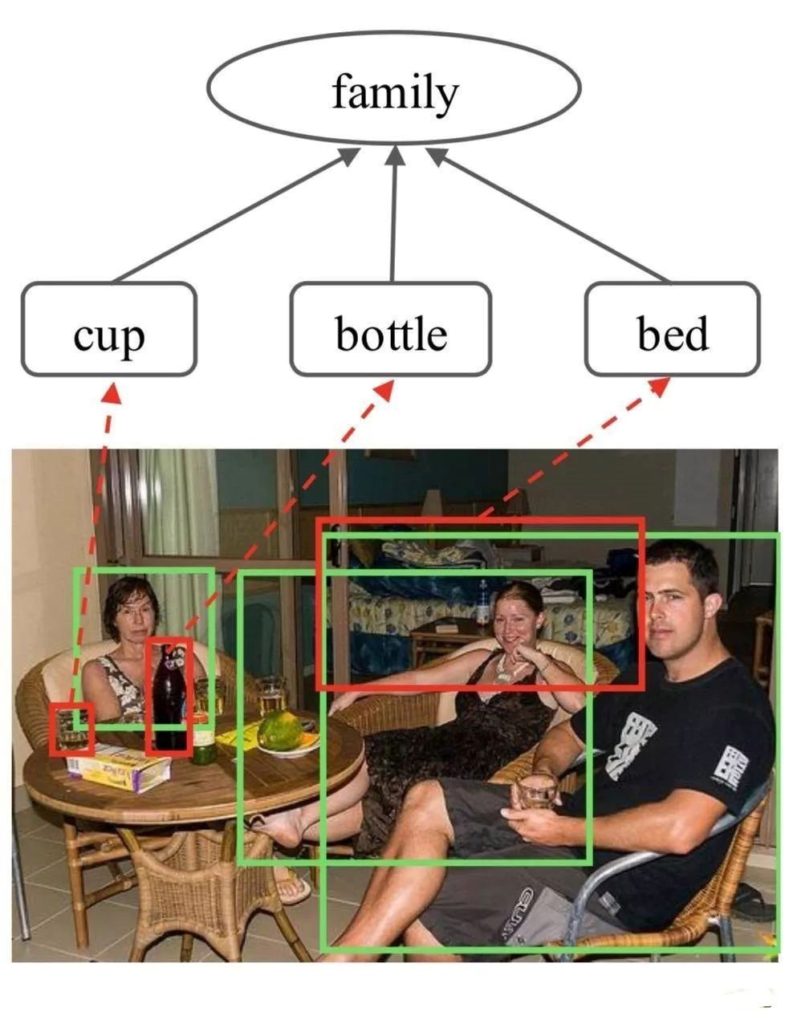

Research on identifying the relationships between people in images also continues, and the results of one university team suggest that social relationships can be recognized by a model trained on data sets.

All of this is based on the Graph Reasoning Model (GRM) trained by the researchers, which uses a Gated Graph Neural Network (GGNN) to reason about social relationships. With it, artificial intelligence can recognize the relationship between the three people in the picture. The model first initializes the relationship nodes from the features of the person regions in the image. It then searches for semantic objects in the image with a pre-trained Faster-RCNN detector, extracts their features, and initializes the corresponding object nodes. By propagating messages between nodes, it discovers the interactions between the people and the contextual objects, and an attention mechanism measures the importance of each object node so that the most informative nodes are adaptively selected for recognition. In actual use, however, this recognition program does not perform as well as expected, which may offer some reassurance.
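The two ideas in this description, propagating messages to a relationship node and weighting object nodes by importance, can be sketched in a few lines. This is a toy illustration only, not the researchers’ model: a real GGNN uses learned weight matrices in a GRU-style update, while the gate below has no learned parameters, and the feature vectors and object names are invented.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def propagate(relation, objects, steps=2):
    """Simplified gated update of a relationship node from object nodes."""
    h = list(relation)
    for _ in range(steps):
        # Message: element-wise mean of the object-node features.
        msg = [sum(col) / len(objects) for col in zip(*objects)]
        # Update gate: how much of the candidate state replaces the old state.
        z = [sigmoid(hi + mi) for hi, mi in zip(h, msg)]
        cand = [math.tanh(hi + mi) for hi, mi in zip(h, msg)]
        h = [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]
    return h


def attend(relation, objects):
    """Attention weights over object nodes (dot-product relevance, then softmax)."""
    return softmax([dot(relation, obj) for obj in objects])


# Hypothetical features: one relationship node and three detected objects.
relation_node = [0.2, -0.1, 0.4]
object_nodes = {
    "desk": [0.5, 0.1, 0.9],
    "laptop": [0.4, 0.0, 0.7],
    "ball": [-0.6, 0.8, -0.3],
}

h = propagate(relation_node, list(object_nodes.values()))
weights = attend(h, list(object_nodes.values()))
most_informative = max(zip(object_nodes, weights), key=lambda kv: kv[1])[0]
print(most_informative, [round(w, 3) for w in weights])
```

The object with the largest attention weight is the one the sketch treats as most informative for the relationship; in a trained model that weighting would be learned from data rather than computed from fixed features.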

Where is the boundary of artificial intelligence ethics?

The New Yorker once ran a cover on which robots have become the masters of the earth and humans can only kneel on the ground and accept their charity. Every new technology raises concerns, but past technologies were mostly an extension of human physical strength. When a technology extends brain power and reaches into privacy, the concern becomes more serious. In short, I think several aspects of the boundaries of artificial intelligence ethics deserve attention.

- A conclusion based on face recognition alone is too sloppy

The Stanford study, published in the Journal of Personality and Social Psychology, reported that deep neural networks are more accurate than humans at detecting sexual orientation from images. The research involved building a computer model to identify what the researchers call the “atypical” features of gay people.

The researchers wrote, “We believe that people’s facial features contain more information about sexual orientation than the human brain can judge. Given a single picture, the classifier can distinguish 81% of male homosexuals and 74% of female homosexuals, and this completely exceeds the accuracy of the human brain’s judgment.”

In practice, however, relying solely on facial structure for recognition is not so “reliable”. The technology itself does not understand people’s sexual orientation; it merely identifies patterns of similarity among the photos of gay people in its image library.

- The problem of algorithmic discrimination still cannot be ignored

Algorithmic discrimination has always been a major problem in applying artificial intelligence. For example, when searching for “CEO” on Google, none of the results shown are women and none are Asian, which points to a potential technical bias. Machine learning resembles human learning in this respect: it extracts and absorbs the norms of social structure from culture. These algorithms therefore amplify and perpetuate the rules that we humans presuppose for them, and those rules always reflect social norms rather than the full objective situation.

Earlier this year, computer scientists from the University of Bath and Princeton used a word-association test similar to the IAT (Implicit Association Test) to detect the underlying tendencies of algorithms and found that even algorithms are biased with respect to race and gender. Even Google Translate does not escape: the algorithm “discovers” and “learns” the prejudices of social convention. In some languages, when an originally gender-neutral term appears in a context with a particular adjective, it translates the neutral word as “he” or “she”.
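A simplified sketch of how such an association test can work on word vectors: for a target word, compare its average cosine similarity to one set of attribute words with its average similarity to another set. This is only an illustration in the spirit of the test, not the researchers’ actual procedure. The two-dimensional vectors below are invented, whereas real tests use embeddings learned from large text corpora, and `association` is a hypothetical helper name.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def association(word_vec, attrs_a, attrs_b):
    """Mean similarity to attribute set A minus mean similarity to set B."""
    mean_a = sum(cosine(word_vec, a) for a in attrs_a) / len(attrs_a)
    mean_b = sum(cosine(word_vec, b) for b in attrs_b) / len(attrs_b)
    return mean_a - mean_b


# Toy 2-D vectors, invented for illustration only.
embeddings = {
    "he": [1.0, 0.1],
    "him": [0.9, 0.2],
    "she": [0.1, 1.0],
    "her": [0.2, 0.9],
    "engineer": [0.8, 0.3],
    "nurse": [0.3, 0.8],
}

male = [embeddings["he"], embeddings["him"]]
female = [embeddings["she"], embeddings["her"]]

for word in ("engineer", "nurse"):
    score = association(embeddings[word], male, female)
    print(word, round(score, 3))  # positive: closer to the male terms; negative: closer to the female terms
```

A score near zero would mean the word is equally close to both attribute sets. Consistently non-zero scores for occupation words are the kind of pattern that signals a learned bias.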

Today’s artificial intelligence is still largely limited to accomplishing specific tasks. Many practical applications, however, are not simple either-or choices, and humans themselves still face moral dilemmas in many decisions. If such decisions are handed to an algorithm, the risks involved clearly cannot be ignored.

- Data usage is a key point

Companies are reluctant to protect user privacy fully not only because it is technically difficult but also because they lack a commercial incentive, so the key in practice is to balance the two. European countries have in fact gone a long way in holding the giants to account for violating user privacy, as reflected in the class actions brought against giants such as Facebook and Google in recent years.

- In August 2014, Facebook was sued by 60,000 people in Europe. An Austrian privacy activist launched a large-scale class action lawsuit against Facebook’s European subsidiary, accusing Facebook of violating European data protection laws.

- Facebook was accused of participating in the United States National Security Agency’s “Prism” program, which collects the personal data of people using the public Internet.

Users are clearly becoming more aware of data protection and are working harder to protect their data and their privacy. For now, however, how to control the use of private user data by artificial intelligence remains a problem without a perfectly balanced solution, because artificial intelligence programs pay less attention to morality than humans do.