Imitating the human olfactory system to make AI smarter

by Ready For AI · October 9, 2018

Artificial intelligence is mimicking humans in many ways, and recently some teams have imitated the human olfactory system to make AI smarter.

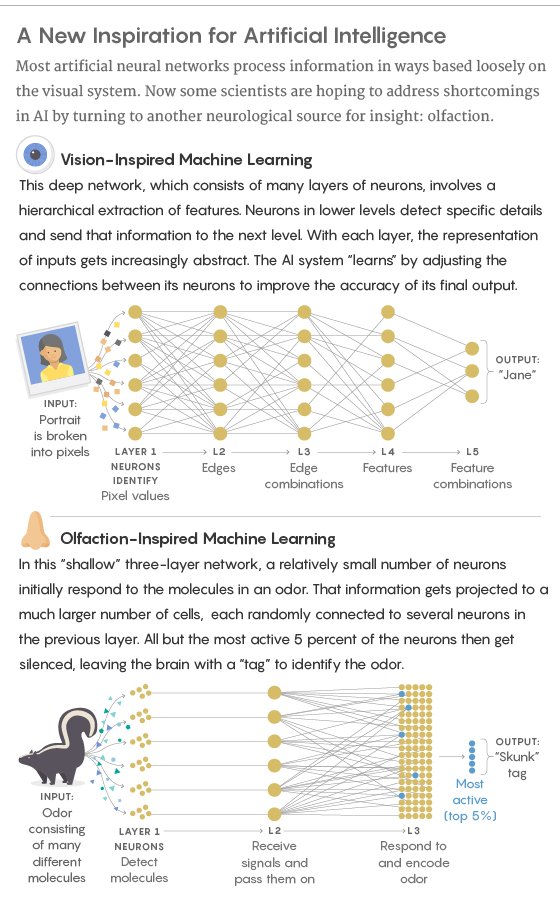

Current status in the field of computer vision

Artificial intelligence systems include artificial neural networks, which are inspired by the way neurons connect in the nervous system, and they perform well on tasks with well-defined constraints. These systems usually also require large amounts of computing power and large training datasets. With these resources, they have excelled at the game of Go, at detecting vehicles in images, and at distinguishing visual objects such as cats and dogs. But their intelligence still falls far short of the human brain's.

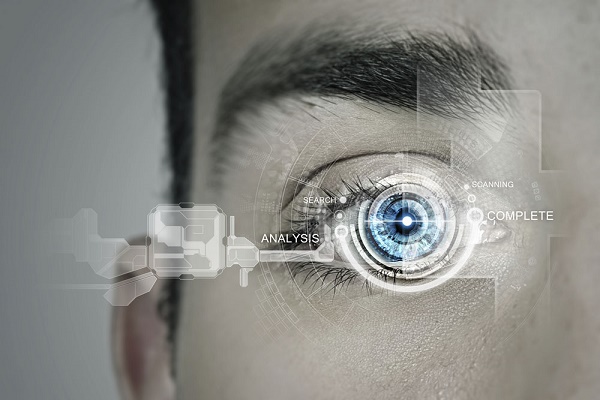

In the 1950s and 1960s, neuroscientists David Hubel and Torsten Wiesel discovered that specific neurons in the visual system correspond one-to-one with specific pixel locations in the retina. This major discovery earned them a Nobel Prize.

Today, even the most advanced machine learning techniques are modeled, at least to some extent, on the structure of the visual system: hierarchical processing of information. When the visual system receives sensory data, its first layers pick out small but well-defined features, such as edges, textures, and colors, which are tied to spatial mapping. As visual information passes through successive cortical neurons, these details are combined into more abstract representations of the input: for example, that the object is a human face, and that the face belongs to Jane.
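
A toy sketch can make the first two stages concrete. It assumes a tiny grayscale image stored as a list of rows and uses a hand-written difference filter. In a real deep network such filters are learned rather than written by hand, and the function names here are illustrative only.

```python
def vertical_edges(image):
    """Stage 1: respond where brightness changes between horizontal neighbors."""
    return [[row[c + 1] - row[c] for c in range(len(row) - 1)] for row in image]


def pool_columns(feature_map):
    """Stage 2: combine the edge responses in each column into one summary value."""
    return [max(abs(v) for v in column) for column in zip(*feature_map)]


# A 3x4 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edges = vertical_edges(image)   # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
summary = pool_columns(edges)   # [0, 1, 0]: one vertical edge, in the middle
```

Each later stage operates on the previous stage's output rather than on raw pixels. That is the sense in which the representation becomes more abstract.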

Deep neural networks work in a similarly layered way, and they have brought a far-reaching revolution to machine learning and artificial intelligence research. To teach these networks to recognize objects such as faces, researchers feed them thousands of sample images. The network strengthens or weakens the connections between its artificial neurons so that it can more accurately determine which particular sets of pixels form the more abstract pattern of a face. Given enough samples, it can recognize faces in new images, including faces in scenes it has never seen before.
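
The "strengthen or weaken connections" step can be illustrated with a single artificial neuron trained by the classic perceptron rule. This is a minimal sketch only. Modern deep networks use gradient descent through many layers instead. The 3x3 images and the "left column lit" target pattern are made-up stand-ins for a face.

```python
def predict(weights, bias, pixels):
    """Fire (1) if the weighted sum of the input pixels exceeds zero."""
    total = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1 if total > 0 else 0


def train(samples, epochs=100, lr=1.0):
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for pixels, label in samples:
            error = label - predict(weights, bias, pixels)  # +1, 0 or -1
            if error:
                mistakes += 1
                # error = +1 strengthens connections from lit pixels;
                # error = -1 weakens them.
                weights = [w + lr * error * p for w, p in zip(weights, pixels)]
                bias += lr * error
        if mistakes == 0:
            break
    return weights, bias


# Flattened 3x3 images; label 1 means "the left column is lit".
samples = [
    ([1, 0, 0, 1, 0, 0, 1, 0, 0], 1),
    ([1, 0, 0, 1, 1, 0, 1, 0, 0], 1),
    ([0, 0, 1, 0, 0, 1, 0, 0, 1], 0),
    ([0, 1, 0, 0, 1, 0, 0, 1, 0], 0),
]
weights, bias = train(samples)
unseen = [1, 1, 0, 1, 0, 0, 1, 0, 0]  # left column plus one stray pixel
print(predict(weights, bias, unseen))  # 1
```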

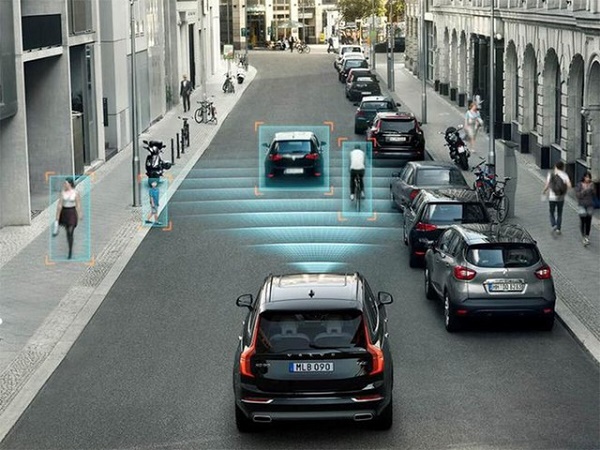

However, deep learning techniques inspired by the visual system may not work well for a self-driving car navigating an unfamiliar environment, where the surroundings keep changing and are full of noise and ambiguity. In fact, vision-based approaches may not be the best way to solve such problems. Adam Marblestone, a biophysicist at the Massachusetts Institute of Technology, said that the field's reliance on visual processing is, at a fundamental level, a matter of contingency: vision was simply the system scientists happened to gain the most insight into, a “historical luck.” That luck has given scientists the most mature systems in artificial intelligence: image-based machine learning applications.

Imitating the human olfactory system

Konrad Kording

“But they’re pretty bad at creating music or writing short stories. Clearly, today’s artificial intelligence systems face significant challenges in reasoning in a meaningful way.”

To overcome these limitations, some research groups are looking to the brain for new answers. More surprisingly, some of them have chosen a seemingly unlikely starting point: smell. These scientists hope to better understand how organisms process chemical information and to uncover coding strategies that may prove useful for artificial intelligence problems. In addition, the olfactory circuit bears a striking resemblance to other, more complex brain regions, and understanding those regions may help us build more powerful intelligent machines.

Saket Navlakha

“Every type of stimulus is handled differently. For example, vision and smell use completely different types of signals. So the brain may use a variety of strategies to handle different types of data. I think that, in addition to studying how the visual system works, there are many other topics that researchers need to explore.”

Saket Navlakha and other researchers have found that the insect olfactory circuit may offer useful lessons. In the 1990s, Columbia University biologists Linda Buck and Richard Axel discovered the genes for odor receptors, a landmark in olfactory research. Since then, the olfactory system has become particularly well characterized, and more researchers have set out to explore how flies and other insects process odors. Some of these scientists believe this research can help address general computational challenges that are hard to tackle through the visual system, because the olfactory system is small enough to be characterized fairly completely.

"Antimap" mechanism and sparse network

Olfaction and vision differ fundamentally on many levels. Odors are unstructured: they have no edges, so they cannot be grouped into objects in space. An odor is a mixture of many components in varying proportions, and it is hard to say which odors are similar to or different from one another, so researchers often do not know which features deserve attention. Instead, researchers have turned to the “antimap” idea proposed by Charles Stevens, a neurobiologist at the Salk Institute.

In a mapping system such as the visual cortex, a neuron's position indicates the type of information it carries. In the antimap of the olfactory cortex, this is not the case. Instead, information is distributed throughout the system, and reading it out requires sampling some minimum number of neurons. More specifically, an antimap is achieved through a sparse representation of information in a higher-dimensional space.

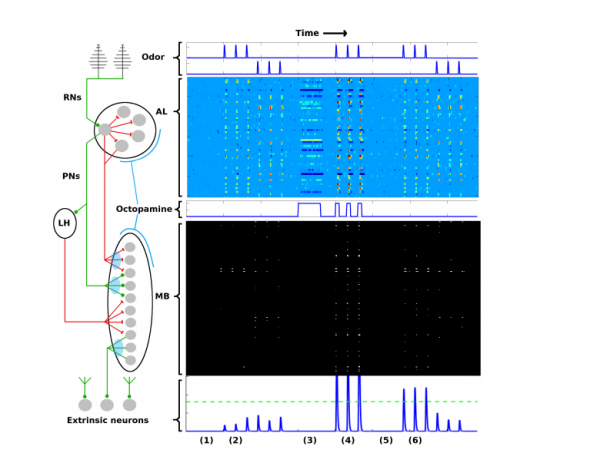

Consider the olfactory circuit of Drosophila, the fruit fly. It starts with 50 projection neurons, which receive input from receptors that are each sensitive to different molecules. A single odor excites many different projection neurons, and each neuron responds to a variety of odors. The information is then randomly projected onto 2,000 Kenyon cells, which encode specific odors. This 40-fold expansion makes odors easier to tell apart by the patterns of neural responses they evoke.

Once the signal reaches the Kenyon cells, the circuit needs a practical way to label different odors with non-overlapping sets of neurons. It does this by making the data sparse. Of the 2,000 Kenyon cells, only about 100 (5 percent) are highly active for a given odor, and the less active cells are silenced. This gives each odor a unique tag.
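
The expand-then-sparsify steps can be sketched in a few lines. The sizes 50, 2,000 and 100 come from the article. Several other details are assumptions: each Kenyon cell samples 6 random projection neurons (`FAN_IN`), inputs are mean-centered, and a simple winner-take-all keeps the top 100 cells. This is an illustration, not the fly's exact wiring or any published implementation.

```python
import random

N_PN = 50       # projection neurons (from the article)
N_KC = 2000     # Kenyon cells, a 40-fold expansion (from the article)
K_ACTIVE = 100  # ~5% of Kenyon cells stay highly active (from the article)
FAN_IN = 6      # assumption: each Kenyon cell samples 6 random projection neurons


def make_projection(seed=0):
    """Random, sparse wiring: the input indices each Kenyon cell listens to."""
    rng = random.Random(seed)
    return [rng.sample(range(N_PN), FAN_IN) for _ in range(N_KC)]


def odor_tag(odor, projection):
    """Return the set of Kenyon cells that remain active for one odor."""
    mean = sum(odor) / len(odor)
    centered = [x - mean for x in odor]  # assumption: simple mean-centering
    activity = [sum(centered[j] for j in inputs) for inputs in projection]
    # Winner-take-all: keep the K_ACTIVE most active cells, silence the rest.
    ranked = sorted(range(N_KC), key=lambda i: activity[i], reverse=True)
    return frozenset(ranked[:K_ACTIVE])


rng = random.Random(1)
projection = make_projection()
coffee = [rng.random() for _ in range(N_PN)]                   # hypothetical odor
coffee_again = [x + rng.uniform(-0.02, 0.02) for x in coffee]  # a slightly noisy sniff
lemon = [rng.random() for _ in range(N_PN)]                    # an unrelated odor
same = len(odor_tag(coffee, projection) & odor_tag(coffee_again, projection))
different = len(odor_tag(coffee, projection) & odor_tag(lemon, projection))
```

Similar inputs end up with heavily overlapping tags, while unrelated inputs share only a few cells. This is what lets a small, sparse set of Kenyon cells act as an odor's label.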

In recent years, as researchers have explored the olfactory system, they have developed algorithms to measure how random embedding into a higher-dimensional space, together with sparsity, affects computational efficiency. Thomas Nowotny of the University of Sussex in the United Kingdom and Ramón Huerta of the University of California, San Diego even drew a connection to an existing machine learning model, the support vector machine.
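
The idea shared by the fly circuit and support vector machines is that projecting data into a higher-dimensional space can make a problem linearly separable when it was not before. The sketch below is not an SVM. It uses random ReLU units (an assumed stand-in for random expansion) and a simple perceptron readout on XOR, a problem no straight line can solve in two dimensions.

```python
import random

# XOR: no straight line separates the +1 points from the -1 points in 2-D.
XOR = [((0.0, 0.0), -1), ((0.0, 1.0), 1), ((1.0, 0.0), 1), ((1.0, 1.0), -1)]


def random_units(n_units=200, seed=0):
    """Random weights and biases for an untrained expansion layer (an assumption)."""
    rng = random.Random(seed)
    return [tuple(rng.uniform(-1, 1) for _ in range(3)) for _ in range(n_units)]


def expand(point, units):
    """Project a 2-D point into len(units) dimensions with a ReLU nonlinearity."""
    x1, x2 = point
    return [max(0.0, a * x1 + b * x2 + c) for a, b, c in units]


def train_perceptron(data, max_epochs=5000):
    """Learn a linear readout; report whether every point ends on the right side."""
    w, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:
            if y * (sum(wi * xi for wi, xi in zip(w, x)) + bias) <= 0:
                w = [wi + y * xi for wi, xi in zip(w, x)]
                bias += y
                mistakes += 1
        if mistakes == 0:
            return w, bias, True
    return w, bias, False


units = random_units()
raw_ok = train_perceptron(XOR)[2]  # stays False: XOR is not linearly separable
expanded_ok = train_perceptron([(expand(p, units), y) for p, y in XOR])[2]
```

Only the readout is trained. The expansion stays random, which mirrors the fixed random wiring onto the Kenyon cells.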

Thomas Nowotny

Natural and artificial systems process information in equivalent ways: both use random organization and dimensional expansion to represent complex data efficiently. In this respect, artificial intelligence and biological evolution have independently converged on the same type of solution.

Building on this connection, Nowotny and his colleagues continued to explore the relationship between smell and machine learning, hoping to find a deeper link between them. In 2009, they showed that an insect olfactory model originally designed to identify odors could also recognize handwritten digits. For a long time afterward, however, researchers did little to follow up on these findings. Only recently have some scientists returned to the biological structure of olfaction, hoping it can help improve machine learning performance on certain specific problems.

Fast learning ability of the olfactory system

A research team has now revisited the kind of experiment Nowotny proposed. They built a model based on the moth's olfactory system and compared it with traditional machine learning models. With fewer than 20 training samples, the moth-based model recognized handwritten digits better. As the amount of training data grew, however, the other models became more accurate.

In terms of learning speed, the olfactory approach appears to have the edge. Here the goal of “learning” is no longer to find the best features and representations for a particular task. Instead, the goal is simplified to identifying which random features are related to the correct result and which are not.
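
A minimal sketch of "learning which random features matter" follows. The random projection is fixed and never trained. The only learning step counts how often each sparse feature fires for each label, which is a simplified Hebbian-style readout. The layer sizes follow the fly figures in the article. `FAN_IN`, the synthetic data, and all names are assumptions. This is not the moth model the researchers actually built.

```python
import random

N_IN, N_KC, K_ACTIVE = 50, 2000, 100  # sizes follow the fly figures in the article
FAN_IN = 6                            # assumption: inputs sampled per random feature
_rng = random.Random(0)
# Fixed random wiring: this part is never trained.
PROJECTION = [_rng.sample(range(N_IN), FAN_IN) for _ in range(N_KC)]


def tag(x):
    """Indices of the Kenyon-cell-like features that stay active for input x."""
    mean = sum(x) / len(x)
    activity = [sum(x[j] - mean for j in inputs) for inputs in PROJECTION]
    return sorted(range(N_KC), key=activity.__getitem__, reverse=True)[:K_ACTIVE]


def train(examples):
    """The only learning: count how often each random feature fires for each label."""
    counts = {}
    for x, label in examples:
        row = counts.setdefault(label, [0] * N_KC)
        for k in tag(x):
            row[k] += 1
    return counts


def classify(counts, x):
    """Pick the label whose associated features overlap most with x's features."""
    active = tag(x)
    return max(counts, key=lambda label: sum(counts[label][k] for k in active))


# Usage with made-up data: three classes, five noisy examples each (15 in total).
data_rng = random.Random(42)
prototypes = {name: [data_rng.random() for _ in range(N_IN)] for name in ("a", "b", "c")}


def noisy(p):
    return [v + data_rng.uniform(-0.03, 0.03) for v in p]


counts = train([(noisy(p), name) for name, p in prototypes.items() for _ in range(5)])
```

Because nothing about the features themselves has to be optimized, a handful of examples per class is enough here. That is the intuition behind the moth model's advantage when training data is scarce.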

In effect, the olfactory strategy builds some of the most basic, primitive concepts into the model, much as a general understanding of the world is built into our brains. The structure itself can then perform some simple, innate tasks without any instruction.

One of the most striking examples comes from work that Navlakha's laboratory did last year. Navlakha's team created an olfaction-based similarity search algorithm and applied it to image datasets. They found that it outperformed traditional non-biological methods based on dimensionality reduction, sometimes by a factor of two to three.
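
Similarity search with a fly-style hash can be sketched as follows. Each item is expanded into random sums and reduced to a sparse tag, and stored items are then ranked by how many active cells they share with the query. The sketch assumes each image has already been reduced to a 50-number feature vector. `FAN_IN` and the data are hypothetical, and this is only in the spirit of Navlakha's algorithm, not his code.

```python
import random

N_IN, N_KC, K_ACTIVE, FAN_IN = 50, 2000, 100, 6  # FAN_IN is an assumption
_rng = random.Random(0)
PROJECTION = [_rng.sample(range(N_IN), FAN_IN) for _ in range(N_KC)]


def fly_hash(x):
    """Expand to N_KC random sums, then keep the top K_ACTIVE as a sparse tag."""
    mean = sum(x) / len(x)
    activity = [sum(x[j] - mean for j in inputs) for inputs in PROJECTION]
    ranked = sorted(range(N_KC), key=activity.__getitem__, reverse=True)
    return frozenset(ranked[:K_ACTIVE])


def nearest(query, database, k=1):
    """Rank stored items by how many active cells their tags share with the query."""
    q = fly_hash(query)
    ranked = sorted(database, key=lambda name: len(q & database[name]), reverse=True)
    return ranked[:k]


# Usage with made-up feature vectors standing in for ten images.
data_rng = random.Random(3)
items = {f"img{i}": [data_rng.random() for _ in range(N_IN)] for i in range(10)}
database = {name: fly_hash(vec) for name, vec in items.items()}
query = [v + data_rng.uniform(-0.02, 0.02) for v in items["img3"]]
print(nearest(query, database))  # ['img3']
```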

The results of Nowotny and Navlakha show that even basic, untrained networks can already perform classification and similar computations. Systems built on such coding schemes can also take on subsequent learning tasks more easily. In work currently under review, Navlakha applied a similar olfaction-based approach to novelty detection. After being trained on thousands of similar objects, the system successfully identified new objects it had not encountered before.
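
Novelty detection can be sketched on top of the same sparse tags. Every random feature starts with weight 1.0 (fully novel) and is dampened each time it fires for an observed input. The novelty of a new input is then the average weight of its active features. This is a simplified illustration under stated assumptions: the decay factor `DECAY` and all names are hypothetical, and it is not the method described in Navlakha's paper.

```python
import random

N_IN, N_KC, K_ACTIVE, FAN_IN = 50, 2000, 100, 6  # FAN_IN is an assumption
DECAY = 0.5                                       # hypothetical dampening factor
_rng = random.Random(0)
PROJECTION = [_rng.sample(range(N_IN), FAN_IN) for _ in range(N_KC)]


def tag(x):
    """Indices of the sparse random features that stay active for input x."""
    mean = sum(x) / len(x)
    activity = [sum(x[j] - mean for j in inputs) for inputs in PROJECTION]
    return sorted(range(N_KC), key=activity.__getitem__, reverse=True)[:K_ACTIVE]


class NoveltyFilter:
    """Each feature starts fully 'novel' (1.0) and is dampened whenever it fires."""

    def __init__(self):
        self.weights = [1.0] * N_KC

    def observe(self, x):
        for k in tag(x):
            self.weights[k] *= DECAY

    def novelty(self, x):
        """Average weight of x's active features: near 1.0 means never seen."""
        active = tag(x)
        return sum(self.weights[k] for k in active) / len(active)


# Usage with made-up inputs: observe five items, then compare two queries.
data_rng = random.Random(7)
seen = [[data_rng.random() for _ in range(N_IN)] for _ in range(5)]
novelty_filter = NoveltyFilter()
for x in seen:
    novelty_filter.observe(x)
familiar = [v + data_rng.uniform(-0.02, 0.02) for v in seen[0]]
fresh = [data_rng.random() for _ in range(N_IN)]
```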

Nowotny is studying how the olfactory system handles mixtures, a challenge that machine learning also faces in this type of application. He and his team found that when people sniff a mixture, they do not deliberately separate the odors. Instead, recognition alternates very rapidly between, say, the smell of coffee and the smell of croissants.

This insight matters for artificial intelligence as well. At a noisy reception, for example, it is often hard to separate several simultaneous conversations. If there are multiple talkers in the room, an artificial intelligence system could handle this by splitting the sound signal into very short time windows. Once the system recognizes a voice from one talker, it could try to suppress input from the others. By alternating in this way, a neural network may be able to follow each conversation smoothly.
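
Here is a toy sketch of the time-window idea, under heavy assumptions. Each short window of audio has already been turned into a small feature vector, such as a coarse spectrum, and a stored template for each talker is available. Each window is routed to the best-matching talker and suppressed for everyone else. The names, templates, and the 0.8 threshold are hypothetical, and real speech separation is far harder.

```python
import math


def cosine(u, v):
    """Cosine similarity; returns 0.0 for an all-zero (silent) window."""
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return sum(a * b for a, b in zip(u, v)) / norm if norm else 0.0


def route_windows(windows, templates, threshold=0.8):
    """Give each window to the best-matching talker and suppress it for the others."""
    streams = {name: [] for name in templates}
    for window in windows:
        scores = {name: cosine(window, t) for name, t in templates.items()}
        best = max(scores, key=scores.get)
        for name in templates:
            keep = name == best and scores[best] >= threshold
            streams[name].append(window if keep else None)  # None = suppressed
    return streams


templates = {"alice": [1.0, 0.0, 0.0, 1.0], "bob": [0.0, 1.0, 1.0, 0.0]}
windows = [[0.9, 0.1, 0.0, 1.0], [0.0, 1.0, 0.8, 0.1], [1.0, 0.0, 0.1, 0.9]]
streams = route_windows(windows, templates)
# streams["alice"] keeps windows 0 and 2; streams["bob"] keeps window 1.
```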

Entering the era of insect cyborgs

The “insect cyborgs” created by Charles Delahunt of the University of Washington and his colleague J. Nathan Kutz pushed this research further. They used the output of their moth-based model as input to machine learning algorithms, which significantly improved the systems' ability to classify images.

Other researchers want to use olfaction research to work out how multiple forms of learning can be coordinated in deeper networks. Besides implementing olfaction-based architectures, another important question in this area is how to define a system's inputs precisely. In a paper published in the journal Science Advances, a group led by Tatyana Sharpee, a neuroscientist at the Salk Institute, set out to characterize odors, and they succeeded in mapping odors into a hyperbolic space.

Sharpee and her colleagues characterized odor molecules by how often they occur together in nature and used those statistics to build a map showing which molecules tend to appear together and which stay distinctly separate. They found that, just as cities can be mapped onto the curved surface of the Earth, odor molecules map onto a curved space. That space is hyperbolic: instead of the positive curvature of a sphere, it has negative curvature and is shaped like a saddle.
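
The function below shows what "negatively curved" means in practice. One standard model of hyperbolic space is the Poincaré ball, in which all points lie inside the unit ball and distances grow rapidly toward its edge. The distance between points u and v is arcosh(1 + 2‖u − v‖² / ((1 − ‖u‖²)(1 − ‖v‖²))). The sketch only computes that distance. It does not reproduce Sharpee's analysis or data.

```python
import math


def poincare_distance(u, v):
    """Hyperbolic distance between two points strictly inside the unit ball."""
    def sq_norm(p):
        return sum(c * c for c in p)

    gap = sq_norm([a - b for a, b in zip(u, v)])
    return math.acosh(1 + 2 * gap / ((1 - sq_norm(u)) * (1 - sq_norm(v))))


# Two pairs with the same Euclidean gap (0.1): one near the center, one near the edge.
near_center = poincare_distance((0.0, 0.0), (0.1, 0.0))  # about 0.20
near_edge = poincare_distance((0.85, 0.0), (0.95, 0.0))   # about 1.15
```

Points that look close on a flat map can be far apart near the boundary. This extra room is why hyperbolic spaces suit data with a branching, hierarchical structure.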

Conclusion

For now, most findings about the olfactory system remain theoretical. The work of Navlakha and Delahunt still needs to be extended to more complex machine learning problems to determine whether olfactory models hold up in practice.

To researchers' excitement, the olfactory system is structurally strikingly similar to other brain regions in many species, especially the hippocampus, which is involved in memory and navigation, and the cerebellum, which is responsible for motor control. Olfaction is an ancient system whose origins can be traced back to bacteria, and all organisms use some form of chemical sensing to explore their surroundings.

The olfactory circuit may serve as a starting point for interpreting the more complex learning algorithms and computational schemes of the hippocampus and cerebellum, and could even help carry those insights over to artificial intelligence. Researchers have begun to focus on cognitive processes such as attention and memory, hoping to find ways to improve current machine learning architectures and mechanisms. In this respect, imitating the human olfactory system may offer a simpler way to make these connections.
